Further Thoughts About the Progressive Enhancement Discussion

Josh Korr, Former Product Strategy Director

My previous post sparked a lot of response: in the comments, on Twitter, and from Aaron Gustafson and Luke Whitehouse. Those responses have helped me refine my thoughts and reconsider my tone, so I want to take another stab. This time I'll take a less combative approach, walk through my thought process more clearly, dispense with the too-clever-by-half LSAT Game stuff that Adrian Roselli fairly called out as sloppy, and try to be a little less insufferable. You should still read the previous post first for context.

On Tone and Good Faith

A few initial thoughts.

In response to my post, Chip Cullen tweeted:

RE that article railing against PE: https://t.co/HfxZNkX9BG

It comes at a development mindset rooted in compassion with active disdain

— Chip Cullen (@chipcullen) December 5, 2016

I'd say intellectual-gotcha glee more than active disdain, but point taken.

Read Aaron's posts about egalitarianism and accessibility (and his response to my post); he is thoughtful and compassionate. He wants to make the web and the world better. Read this, um, strident Brad Frost post — the stridency is rooted in fierce empathy. I also realize many of these folks are a big reason for indispensable parts of the modern web: web standards, responsive web design, atomic design, accessibility.

I stand by my thinking, as we'll see, but not because I disdain Aaron or anyone else. I shouldn't take my tone in that direction, either. In this post I'll use the term "mega-PEer" instead of "hard-liner," which I hope is more neutral. Another part of changing tone is keeping perspective about the relatively trivial thing I'm actually trying to do: prove an intellectual point to help explain a discussion logjam. As many have pointed out, there are definitely more important things to be fighting for and about these days.

Also, while I was not speaking officially for Viget (we're encouraged to share our own ideas without always seeking consensus), I should have emphasized how much Viget cares about accessibility (as do I), and that I wasn't saying Viget devs ignore progressive enhancement.

It's About the Discussion, Not the Approach

The purpose of my post was not to assess progressive enhancement as an approach to web development. (Hence my not-a-dev disclaimer.) And yes, PE is undeniably a practical approach to web development.

The purpose was to offer an explanation for the PE discussion's dynamic: how it's highly combustible and seems to go around in frustrating circles.

Here's my rephrased theory:

Mega-PEers frequently say they aren't rigid. As Aaron writes in his response: "We ... all believe that the right technical choices for a project depend specifically on the purpose and goals of that specific project. In other words it depends."

Yet mega-PEers' behavior can contradict this self-image: For example, they sometimes strongly call out developers who simply mention ideas (particularly about JavaScript) that mega-PEers disagree with.

In these cases, folks are actually thinking and talking in moral terms, but don't always realize or acknowledge that. They believe they are talking in it-depends, pragmatic ways.

This causes the discussion to go in circles.

I'm not saying progressive enhancement is inherently moral or immoral. (Believe it or not, I wasn't trying to get that philosophical.) I'm not saying mega-PEers wrote a moral philosophy on a parchment scroll 13 years ago and recite it every morning, hand on heart. I'm saying it's important to recognize there's a strong moral undercurrent to the PE discussion.

Why It's Important to Talk About Morality

Why does it matter if PE has a moral foundation, or if PE is used in part to achieve moral goals? Why am I risking such wrath and treading into such murky waters? Am I just a nihilist, lacking morals or compassion, playing lazy intellectual games for kicks and clicks?

It matters because a moral foundation, even a compassionate one, can be used to silence and shame.

It matters because when your argument is heavily a moral one, it makes people who hold different views not simply intellectual adversaries — it makes them immoral. Not "your argument is flawed," but "you're a bad person." Which makes it impossible for them to argue with you in a fair way.

It matters because moral shaming is a scorched-earth affair: When you shame people who also believe in caring about people, you’re undermining their compassion and their potential contributions to users and to the world.

And I know this is fraught, but my observation is that some PEers — who collectively mean so well, and believe in everything they’re fighting for, and are good people for fighting for what they believe in — are doing all of this to their peers.

You can see this behavior in Nolan Lawson's post, when he describes the response to a conference talk he gave:

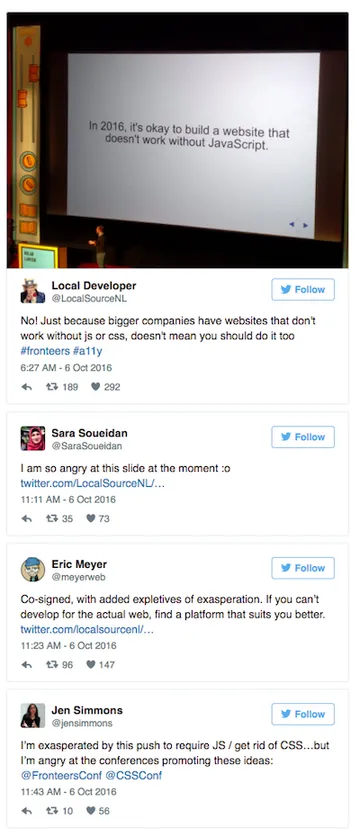

"When I showed this slide, all hell broke loose:

Lawson continues: "The condemnation was as swift as it was vocal. Many prominent figures in the web community ... felt compelled not only to disagree with me, but to disagree loudly and publicly. Subtweets and dot-replies ran rampant. As one commentator put it, ‘you’d swear you had killed a baby panda the way some people react.’"

You can see similar behavior in another recent PE flare-up. Alex Russell literally said "shrug" about some PE ideas, and Jeremy Keith responded like this:

This does not sound like an "it depends" mindset to me. (Saying "JK it depends" after the fact doesn't count.)

Rather, someone shows a conference presentation slide, and the reaction's implication is "you shouldn't say that because your idea is morally bad." Someone tweets a shrug, and the reaction's implication is "what you said is morally bad, and you don't care about people."

I'm not saying people are doing this intentionally, or without the best of intentions. I'm not saying everyone I quoted is doing it. But it is happening. Many responses to my post have certainly been in this vein.

Of course there are times when it's appropriate to use morals to shame. But if you're going to do it, you should take care (a) that the person you're shaming doesn't also have the same or an equally valid moral foundation, and (b) that your moral ideas are thoroughly considered.

Is It Really About Morals?

@SaraSoueidan so wouldn't want to write off such an article, even if it shows a lack of understanding. Knowing our audience and all that

— Father Smithmas 🌈🎄 (@rachsmithtweets) December 5, 2016

But back up: Do morals really play as strong a part as I claim?

Well, consider the alternative: that practical considerations are behind this behavior.

A primary practical reason given for PE is it accounts for the ~1% of visits/page loads when JavaScript doesn't run, which could happen for voluntary (user doesn't have JS) or non-voluntary (JS fail, rando site fail) reasons. This affects a random sampling of page loads — it's not, say, a group of users with disabilities, so isn't an issue of equal access.

In his response, Aaron offers other practical reasons for taking a PE approach: reliability, reach, development costs, general (non-a11y-related) usability.

Could any of these explain the strong reactions we see?

Take the 1% failure consideration. There are many contexts where there's an inherent interest in addressing the 1% failures or avoiding them in the first place: government sites, community organization sites, .edus. (I should have been clearer in the first post that I recognize these contexts exist.) But in many other contexts (business or consumer software, entertainment, advertising), addressing the 1% is just a business decision for a given site, right?

As for the other practical considerations, do you think the prospect of Random Business X increasing its customer base is what's motivating this?

I don't.

So why do PE discussions turn out this way?

The answer I landed on: There has to be a moral, philosophical component to it. Nothing else could inspire such passionate reactions.

Indeed, as I showed in my first post, you can see moral concerns suffusing PEers' writing:

"Let’s accept that network connections are unevenly distributed. Let’s also accept that browser features are unevenly distributed. Pretending that millions of Opera Mini users don’t exist isn’t a viable strategy. They too are people who want to communicate, to access information, to be empowered, and to love." — Jeremy Keith

“The minute we start giving the middle finger to these other platforms, devices and browsers is the minute where the concept of The Web starts to erode. Because now it’s not about universal access to information, knowledge and interactivity. It’s about catering to the best of breed and leaving everyone else in the cold.” — Brad Frost

So it doesn't take a tortured LSAT Game thought exercise to say that when Jeremy Keith says this ...

@slightlylate Yeah, fuck those Opera Mini users. Right, @brucel?

— Jeremy Keith (@adactio) June 4, 2016

... he's probably not talking about lower development costs.

Moving the Discussion Forward

By all means, let's propose new moral causes and ideas. But when we do, let's apply more nuance and rigor.

Be mindful of conflating practical considerations (performance, reliability, business opportunity) with moral ones (accessibility), "it depends" with "it's morally necessary." Be mindful of claiming ownership of a broadly definable ethos like "caring about people is good." Be mindful of conflating existing, vetted moral ideas (accessibility, net neutrality, right to internet access) with other, loosely articulated ones. When proposing the latter, unpack the ideas; follow the logic through to its ends, see if it holds up. Let others test and question the logic, as I did (albeit harshly) in my first post. That process is what gave net neutrality and other causes their strong, lasting foundations.

A couple of Aaron's other articles illustrate the often-subtle difference between "it depends" and "it's morally necessary."

In "Developing Dependency Awareness," he writes: "If you don’t consider the possibility a given dependency might not be met, you run the risk of frustrating your users. You might even drive them into the arms of your competition. So be aware of dependencies. Address them proactively." (Emphasis added.)

This is a pragmatic framing: dependencies are a business risk, not a moral one, so ultimately up to a given site's creator. He still invokes his overarching moral goal — sites should be "usable by the greatest number of people in the widest variety of scenarios" — without implying that falling short of that goal causes moral harm. That's truly an "it depends" mindset. (And of course, certain contexts, e.g. a government site, can change the it-depends equation.)

Compare that to "Progressive Misconceptions," where Aaron writes: "The progressive enhancement camp needs to concede that all JavaScript is not evil, or even bad — JavaScript can be a force for good and it’s got really solid support. The JavaScript camp needs to concede that ... we can never be absolutely guaranteed our JavaScript programs will run." (Emphasis added.)

Here we're back to unspoken moral territory. Aaron's not really asking the JS camp to concede that JS may not run. They readily concede that. He's asking them to concede that this risk matters in more than an it-depends way.

In "Progressive Misconceptions," as in his response to my post, Aaron is being very nice and trying hard to bridge the gap. But that gap will be unbridgeable as long as "it depends" and "it's morally necessary" are so casually interchanged. Aaron's other, more pragmatic words point the way forward.