Building a Simple API with Amazon Lambda and Zappa

Ian Brennan, Former Developer

AWS Lambda and Zappa make deployment easy and fast, plus you don't have to worry about provisioning servers.

We recently had a client come to us with a request for a simple serverless API. They wanted little to no administrative overhead, so we went with AWS Lambda. It was my first foray into Lambda, and getting it set up came with its fair share of headaches. If you're starting down the same path and want to build a simple API with Lambda, here's a tutorial to help.

GitHub

If you would rather go through the tutorial on GitHub, you can find it here.

AWS Lambda

This is a great service offered by AWS that lets you run an application or function without managing servers. It's a cloud-based, serverless architecture that scales automatically out of the box: deploy your code, and AWS does the rest. Your code only runs when it is "triggered," either by another AWS service or by an HTTP request (for example, through API Gateway). The service is still relatively young and has room to grow, but for simple applications, and for more complex individual functions, it works really well.
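Zappa generates the Lambda entry point for you, so you won't write one yourself in this tutorial. To make "triggered" more concrete, here is a minimal sketch of a plain Python Lambda handler invoked by API Gateway through a proxy integration. The `lambda_handler(event, context)` signature and the `statusCode`/`headers`/`body` response shape follow the standard Lambda and API Gateway proxy conventions. The greeting logic and the `name` query parameter are hypothetical.

```python
import json


def lambda_handler(event, context):
    """Handle an API Gateway proxy event and return a proxy-style response."""
    # API Gateway passes query string parameters as a dict, or None when there are none.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "World")  # hypothetical parameter, for illustration only
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": "Hello %s!" % name}),
    }


if __name__ == "__main__":
    # Simulate an invocation locally with a hand-built event (context is unused here).
    fake_event = {"httpMethod": "GET", "path": "/", "queryStringParameters": {"name": "Lambda"}}
    print(lambda_handler(fake_event, None))
```

The function receives the whole HTTP request as a dict and returns the whole response as a dict. Zappa hides exactly this translation layer behind your Flask routes.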

Zappa

Zappa is a wonderful Python package created by Miserlou, otherwise known as Rich Jones from gun.io. It saved me a lot of time. It lets you deploy code to Lambda with minimal configuration and a single command from the CLI.
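Roughly speaking, Zappa's handler turns each incoming API Gateway event into a WSGI request and passes it to your Flask app. Below is a heavily simplified sketch of that idea. It is not Zappa's actual code. `event_to_environ`, `call_wsgi`, and `hello_app` are hypothetical names. A real adapter also handles headers, query strings, binary bodies, and much more.

```python
import io
import sys


def event_to_environ(event):
    """Build a minimal WSGI environ from an API Gateway proxy event (no headers or query string)."""
    body = (event.get("body") or "").encode("utf-8")
    return {
        "REQUEST_METHOD": event.get("httpMethod", "GET"),
        "SCRIPT_NAME": "",
        "PATH_INFO": event.get("path", "/"),
        "QUERY_STRING": "",
        "CONTENT_LENGTH": str(len(body)),
        "SERVER_NAME": "lambda",
        "SERVER_PORT": "443",
        "SERVER_PROTOCOL": "HTTP/1.1",
        "wsgi.version": (1, 0),
        "wsgi.url_scheme": "https",
        "wsgi.input": io.BytesIO(body),
        "wsgi.errors": sys.stderr,
        "wsgi.multithread": False,
        "wsgi.multiprocess": False,
        "wsgi.run_once": False,
    }


def call_wsgi(app, event):
    """Run a WSGI app for one event and return an API Gateway proxy-style response."""
    captured = {}
    written = []

    def start_response(status, headers, exc_info=None):
        captured["status"] = int(status.split(" ", 1)[0])
        captured["headers"] = dict(headers)
        return written.append  # the legacy write() callable that PEP 3333 requires

    result = app(event_to_environ(event), start_response)
    try:
        chunks = list(result)  # iterating may be what triggers start_response
    finally:
        if hasattr(result, "close"):
            result.close()
    body = b"".join(written) + b"".join(chunks)
    return {"statusCode": captured["status"], "headers": captured["headers"], "body": body.decode("utf-8")}


def hello_app(environ, start_response):
    """A tiny stand-in WSGI app; in the tutorial, the Flask app object plays this role."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello World!"]
```

For example, `call_wsgi(hello_app, {"httpMethod": "GET", "path": "/"})` returns a dict with `statusCode` 200 and body `"Hello World!"`. Because a Flask app is itself a WSGI callable, the same shape of adapter works for it.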

Alright, let's get started.

```shell
brew update
brew install python3
```

Always good to upgrade your essential packages:

```shell
pip install --upgrade pip setuptools pipenv
```

We'll be using a Pipfile to manage dependencies. Create the Pipfile and virtual environment like this:

```shell
pipenv --three
pipenv shell
```

You should now have a working virtual environment and Pipfile.

Next, install Zappa and Flask, a lightweight framework for Python web applications:

```shell
pipenv install zappa flask
```

If you don't have the AWS CLI installed:

```shell
pipenv install --dev awscli
```

This will help us get AWS set up to play nicely with Zappa.

Now create a file called app.py in your project and open it up in your favorite text editor.

app.py

```python
# app.py
from flask import Flask

app = Flask(__name__)


# here is how we are handling routing with flask:
@app.route('/')
def index():
    return "Hello World!", 200


# include this for local dev
if __name__ == '__main__':
    app.run()
```

Now you have a tiny little app ready to go. Let's run it from the terminal:

```shell
export FLASK_APP=app.py
flask run
```

Then open http://localhost:5000 and you should be welcomed by a little "Hello World!"

If you haven't already, you'll need to configure the AWS CLI with your credentials by following these Amazon docs.

Deployment with Zappa

```shell
zappa init
```

This will create your zappa_settings.json file and add it to the project. Now you can deploy! This is what makes Zappa so nice: it takes this tiny config, then packages and deploys your app with one command. You can check out more advanced settings to add to your project here: advanced zappa settings
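The exact file `zappa init` writes depends on your answers and your Zappa version, but it is a small JSON document keyed by stage name. Here is a sketch of a typical single-stage file, built in Python so it can also check one common gotcha: whether the `runtime` matches the Python you're packaging with. `app_function`, `aws_region`, `profile_name`, `project_name`, `runtime`, and `s3_bucket` are standard Zappa settings keys. Every value here is a placeholder, and `runtime_matches` is a hypothetical helper.

```python
import json
import sys

# Placeholder values for illustration; zappa init asks for (or generates) your own.
settings = {
    "dev": {
        "app_function": "app.app",  # module.variable that points at the Flask app
        "aws_region": "us-east-1",
        "profile_name": "default",
        "project_name": "simple-api",
        "runtime": "python3.9",
        "s3_bucket": "zappa-example-bucket",
    }
}


def runtime_matches(settings, stage, version_info=sys.version_info):
    """Return True if the stage's runtime matches the local interpreter's major.minor version."""
    local_runtime = "python%d.%d" % (version_info[0], version_info[1])
    return settings[stage].get("runtime") == local_runtime


if __name__ == "__main__":
    print(json.dumps(settings, indent=4))
    print("runtime matches local Python:", runtime_matches(settings, "dev"))
```

Zappa builds the deployment package from your local virtual environment. A mismatch between that environment's Python and the Lambda `runtime` is therefore worth ruling out early.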

```shell
zappa deploy
```

You may have trouble with this deploy. For example, you might see the following when you try to view your site:

```json
{
  "message": "internal server error"
}
```

If so, check CloudWatch. If your logs contain something like:

```text
Unable to import module 'handler': No module named builtins
```

there is most likely a mismatch between your local Python version and pipenv's version. Double-check that and redeploy. Switching pipenv to a different Python version is as easy as:

```shell
pipenv --two
```

For the next routes we'll need `Response` and `json` from Flask, so update the import:

```python
# app.py
from flask import Flask, Response, json
```

Adding a GET route is pretty straightforward with Flask. Let's add one for getting a fake user:

```python
# app.py
@app.route('/user', methods=["GET"])
def user():
    resp_dict = {"first_name": "John", "last_name": "Doe"}
    # mimetype tells the client the body is JSON rather than HTML
    response = Response(json.dumps(resp_dict), 200, mimetype="application/json")
    return response
```

Let's run that locally and check that everything is A-OK:

```shell
export FLASK_APP=app.py
flask run
```

Navigate to http://localhost:5000/user and you should see:

```json
{
  "first_name": "John",
  "last_name": "Doe"
}
```

And that's it! We can now update our Zappa function with:

```shell
zappa update
```

Post

We'll need `request` from Flask for this, so add it to the import:

```python
# app.py
from flask import Flask, Response, json, request
```

Add POST to your route's methods list and adjust your route function:

```python
# app.py
@app.route('/user', methods=["GET", "POST"])
def user():
    resp_dict = {}
    if request.method == "GET":
        resp_dict = {"first_name": "John", "last_name": "Doe"}
    if request.method == "POST":
        # request.form holds the fields of a form-encoded request body
        data = request.form
        first_name = data.get("first_name", "")
        last_name = data.get("last_name", "")
        email = data.get("email", "")
        resp_dict = {"first_name": first_name, "last_name": last_name, "email": email}
    response = Response(json.dumps(resp_dict), 200, mimetype="application/json")
    return response
```

You can run this locally and hit it with Postman or any other client you like for making requests. Just make sure you send the fields as form data (form-data or x-www-form-urlencoded). A JSON body won't show up in `request.form`. Once everything works, run `zappa update` and try out POST and GET against the Lambda function.
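If you'd rather script the request than use a GUI, the standard library can send the same form-encoded POST. This is a minimal sketch: `URL` is a hypothetical local address (swap in your API Gateway URL after deploying), and `build_form_post` is an illustrative helper. Flask parses `application/x-www-form-urlencoded` bodies into `request.form`, so the route above can read these fields.

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen

# Hypothetical local URL; replace it with your deployed endpoint when testing Lambda.
URL = "http://localhost:5000/user"


def build_form_post(url, fields):
    """Build a POST request whose body is URL-encoded form data."""
    body = urlencode(fields).encode("utf-8")
    return Request(
        url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_form_post(URL, {"first_name": "Jane", "last_name": "Doe", "email": "jane@example.com"})
    with urlopen(req) as resp:  # requires the Flask app to be running
        print(resp.status, resp.read().decode("utf-8"))
```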

Next, we'll store users in a MySQL database on Amazon RDS. Create the instance with the AWS CLI, replacing the placeholders in angle brackets:

```shell
aws rds create-db-instance \
    --db-instance-identifier <db identifier, nothing special here> \
    --db-instance-class db.t2.micro \
    --engine mysql \
    --allocated-storage 5 \
    --no-publicly-accessible \
    --db-name <name you want to give your database> \
    --master-username <this is db specific, so use whatever you want> \
    --master-user-password <same as above, but some symbols won't be accepted> \
    --backup-retention-period 3
```

You should see some output in the console describing your new database and its configuration. I'd also recommend running a local MySQL database for testing. You can update the Lambda function every time you want to debug, but that gets tedious.

Setting Environment Variables

Zappa makes this pretty easy, but I don't really like the idea of putting these values into my zappa_settings.json file. Instead, I opted to use a .env file with the python-dotenv package. Let's add the dependencies we'll need (python-dotenv, plus PyMySQL for talking to MySQL) and import them first.

Make sure you are not in your virtual env when installing dependencies:

```shell
pipenv install python-dotenv pymysql
```

Boot your venv back up:

```shell
pipenv shell
```

and import them:

```python
# app.py
import os
import logging

import pymysql
import pymysql.cursors
from dotenv import load_dotenv
```

Alright, we'll need to add a couple of variables to get things working with the new database. First create a .env file, then add these:

```text
DB_HOST=<address of your new db: the endpoint value shown when you expand your db information in the AWS RDS console>
DB_USERNAME=<username you set in the cli command>
DB_PASSWORD=<password you set in the cli command>
DB_NAME=<name you set in the cli command>
```

The code below also reads an optional DB_PORT, which defaults to MySQL's standard port, 3306. I also recommend creating a .dev.env file with the same variables, but set up for your local MySQL database. Let's read those values and set them as global variables in app.py:

```python
# app.py
# first, load your env file, replacing the path here with your own if it differs.
# when using the local database, point this at .dev.env instead.
# load_dotenv copies the file's values into os.environ (existing variables win by default).
load_dotenv(os.path.join(os.path.dirname(os.path.abspath(__file__)), ".env"))

# set globals
RDS_HOST = os.environ.get("DB_HOST")
RDS_PORT = int(os.environ.get("DB_PORT", 3306))
NAME = os.environ.get("DB_USERNAME")
PASSWORD = os.environ.get("DB_PASSWORD")
DB_NAME = os.environ.get("DB_NAME")

# we need to instantiate the logger
logger = logging.getLogger()
logger.setLevel(logging.INFO)
```

Now let's create a connect function for connecting to our new database:

```python
# app.py
def connect():
    try:
        # recent PyMySQL versions require keyword arguments here
        conn = pymysql.connect(
            host=RDS_HOST,
            user=NAME,
            password=PASSWORD,
            database=DB_NAME,
            port=RDS_PORT,
            cursorclass=pymysql.cursors.DictCursor,  # rows come back as dicts
            connect_timeout=5,
        )
        logger.info("SUCCESS: connection to RDS successful")
        return conn
    except Exception:
        logger.exception("Database Connection Error")
        # callers should check for None before using the connection
        return None
```

Then add the call to connect in our index function for testing purposes:

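```python
# app.py
@app.route('/')
def index():
    conn = connect()
    if conn is not None:
        conn.close()  # we only wanted to confirm the connection works
    return "Hello World!", 200
```

AWS VPC Configuration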

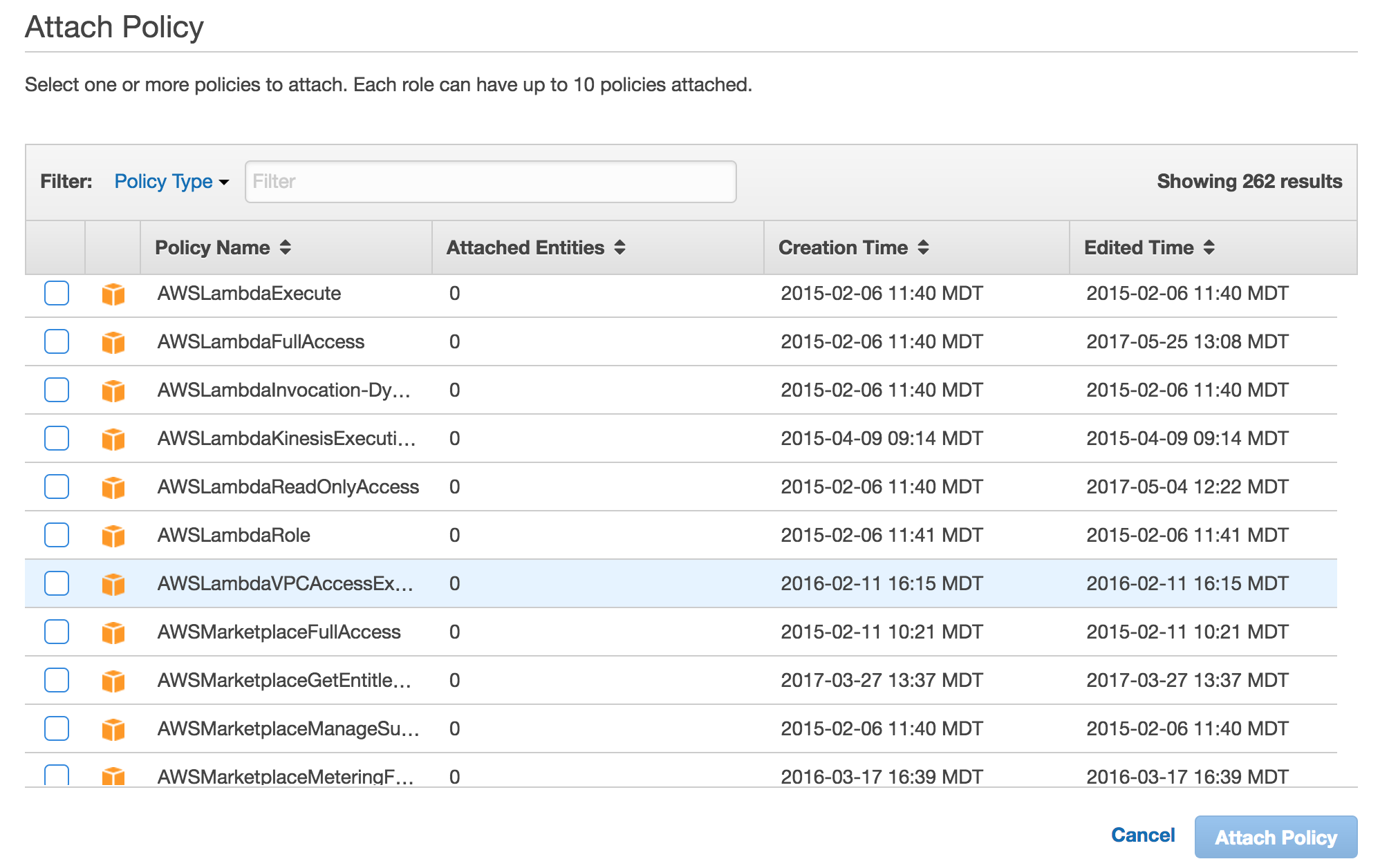

Before we run this, though, we'll need to give the Lambda function's IAM role permission to make this connection through the VPC. Sign in here. Since we have deployed before, you should see the role Zappa automatically created for us. Its name should end with "ZappaLambdaExecutionRole". Click on that role.

You'll then see something like this:

Click Attach policy, and in the policy list select AWSLambdaVPCAccessExecutionRole:

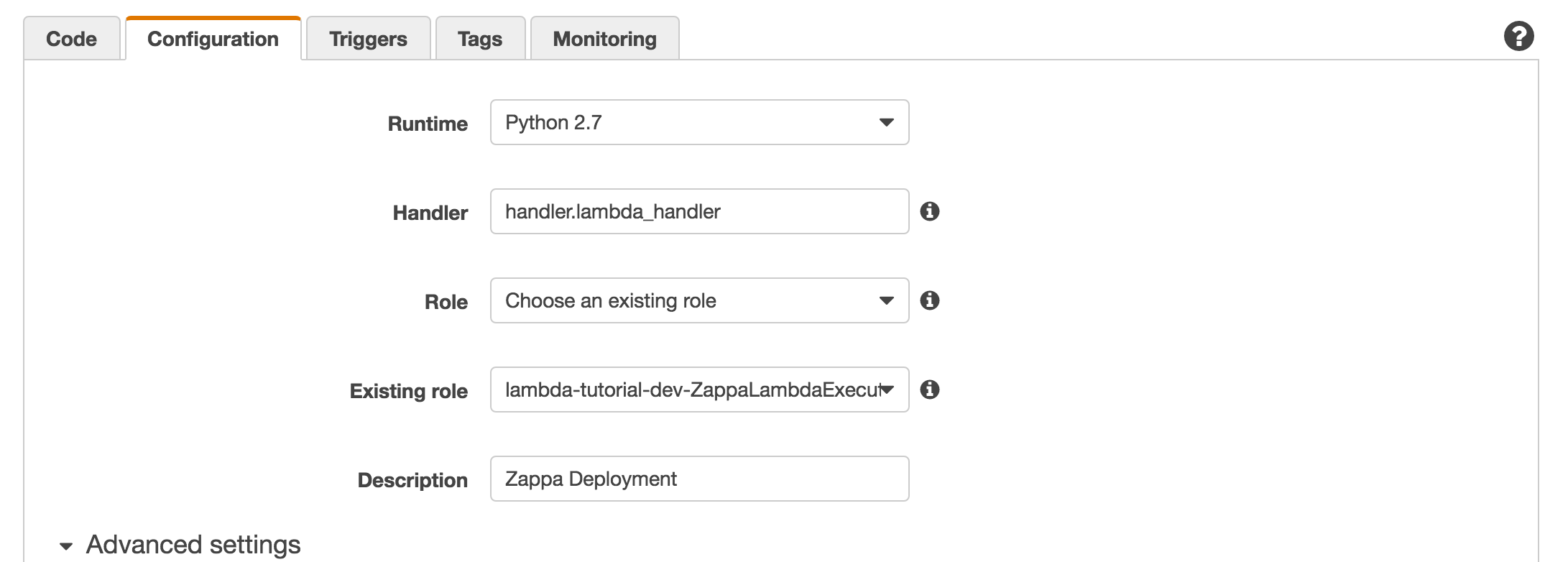

OK, now our role should be good to go for interacting with our RDS instance on the default VPC. Next, configure the Lambda function so it can connect to the RDS instance. Open up your Lambda console by heading here. Open the Lambda function you've already deployed and click into the configuration tab:

For role, select "Choose an existing role". For existing role, enter the name of the role we just attached the policy to. Then open up the advanced settings dropdown:

At this point I just selected my default VPC under VPC, added three of its associated subnets, and added the default security group under security groups. This gives your Lambda function access to the VPC where your RDS instance lives. Otherwise, we will just get timeouts when we try to read from and write to the database.
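Zappa also accepts a `vpc_config` block (with `SubnetIds` and `SecurityGroupIds`) in zappa_settings.json. Keeping it there means the VPC settings live in your project rather than only in the console. Here is a sketch that adds the block to an existing settings file. `add_vpc_config` is a hypothetical helper, the stage name `dev` is an assumption, and the subnet and security group IDs are placeholders for the ones you picked above.

```python
import json


def add_vpc_config(settings, stage, subnet_ids, security_group_ids):
    """Return a copy of the settings with a vpc_config block added to one stage."""
    updated = json.loads(json.dumps(settings))  # cheap deep copy of plain JSON data
    updated[stage]["vpc_config"] = {
        "SubnetIds": list(subnet_ids),
        "SecurityGroupIds": list(security_group_ids),
    }
    return updated


if __name__ == "__main__":
    path = "zappa_settings.json"
    with open(path) as f:
        current = json.load(f)
    current = add_vpc_config(
        current,
        "dev",  # assumed stage name
        ["subnet-aaaa1111", "subnet-bbbb2222", "subnet-cccc3333"],  # placeholder subnet IDs
        ["sg-0123456789abcdef0"],  # placeholder default security group ID
    )
    with open(path, "w") as f:
        json.dump(current, f, indent=4)
```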

Press Save, then Test. The test output is generally not useful here, but it shouldn't throw any unexpected errors. Now deploy the app:

```shell
zappa update
```

and tail the logs:

```shell
zappa tail
```

You should see the log line for a successful connection to the RDS instance. If not, you may want to go back over the Amazon steps, or open an issue on my repo.
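When the connection doesn't show up in the logs, two things are worth ruling out. One is a missing variable, which makes every attempt fail the same way. The other is a transient timeout while the VPC networking settles. Below is a sketch of two small debugging helpers. `missing_db_vars` and `connect_with_retry` are hypothetical names, and the retry count and delays are arbitrary choices.

```python
import os
import time

# The variables app.py reads from .env (DB_PORT is optional and defaults to 3306).
REQUIRED_VARS = ("DB_HOST", "DB_USERNAME", "DB_PASSWORD", "DB_NAME")


def missing_db_vars(env):
    """Return the required database variables that are unset or empty in `env`."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


def connect_with_retry(connect_fn, attempts=3, delay=0.5):
    """Call connect_fn until it returns a connection, or give up and return None.

    connect_fn is expected to return None on failure, as the tutorial's connect() does.
    """
    for attempt in range(1, attempts + 1):
        conn = connect_fn()
        if conn is not None:
            return conn
        if attempt < attempts:
            time.sleep(delay * attempt)  # wait a little longer after each failure
    return None


if __name__ == "__main__":
    missing = missing_db_vars(os.environ)
    print("missing database variables:", missing or "none")
```

In app.py you could call `connect_with_retry(connect)` wherever the tutorial calls `connect()`. Keep the attempts multiplied by the 5-second `connect_timeout` comfortably under your Lambda function's timeout.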

Building the user table

For the purposes of this tutorial, I'm just going to build a GET endpoint that does the work for us. The Lambda function already lives on the same VPC as the RDS instance we created, so it's easy to make that connection here. For future and more robust projects, I recommend creating a VPC that can accept an SSH connection from EC2. These tutorials will get you there: VPC AWS tutorial & ec2 ssh tutorial. For now, we'll just create the table through the Lambda function for simplicity. I do recommend trying these queries on your local MySQL database before deploying with Zappa, since debugging will be faster and mistakes easier to correct.
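If you don't have a local MySQL server running yet, Python's built-in `sqlite3` module can serve as a rough smoke test for the table definition used in the build step below. This swaps in SQLite for MySQL, so it only catches basic mistakes. SQLite's type handling and SQL dialect differ from MySQL's, so a MySQL run is still the real check. `build_user_table` is a hypothetical helper.

```python
import sqlite3

CREATE_USER_TABLE = (
    "create table User (ID varchar(255) NOT NULL, firstName varchar(255) NOT NULL, "
    "lastName varchar(255) NOT NULL, email varchar(255) NOT NULL, PRIMARY KEY (ID))"
)


def build_user_table(conn):
    """Drop and recreate the User table, mirroring the tutorial's build step."""
    conn.execute("drop table if exists User")
    conn.execute(CREATE_USER_TABLE)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # throwaway in-memory database
    build_user_table(conn)
    build_user_table(conn)  # running it twice works thanks to "drop table if exists"
    print([row[1] for row in conn.execute("pragma table_info(User)")])
```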

First, remove the connect function call from the index endpoint, since we no longer need to make that connection. Next, let's add a build_db function:

```python
# app.py
def build_db():
    conn = connect()
    if conn is None:
        return Response(json.dumps({"status": "error", "message": "could not connect to database"}), 500, mimetype="application/json")
    query = (
        "create table User (ID varchar(255) NOT NULL, firstName varchar(255) NOT NULL, "
        "lastName varchar(255) NOT NULL, email varchar(255) NOT NULL, PRIMARY KEY (ID))"
    )
    try:
        # the with block closes the cursor when it exits
        with conn.cursor() as cur:
            # just in case it doesn't work the first time, drop the table if it exists
            cur.execute("drop table if exists User")
            cur.execute(query)
        conn.commit()
    except Exception as e:
        logger.exception(e)
        return Response(json.dumps({"status": "error", "message": "could not build table"}), 500, mimetype="application/json")
    finally:
        conn.close()
    return Response(json.dumps({"status": "success"}), 200, mimetype="application/json")
```

And the endpoint we'll use to hit it:

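```python
# app.py
@app.route('/build', methods=["GET"])
def build():
    return build_db()
```

Run `zappa update` and visit /build once to create the table.

Next, to keep our responses consistent, let's add a small helper for building them:

```python
# app.py
def build_response(resp_dict, status_code):
    response = Response(json.dumps(resp_dict), status_code, mimetype="application/json")
    return response
```

Next, we need to implement the POST request handling inside our user function: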

```python
# app.py
@app.route('/user', methods=["GET", "POST"])
def user():
    if request.method == "GET":
        # respond to GET, coming soon; until then, say so explicitly
        return build_response({"status": "error", "message": "not implemented yet"}, 501)

    if request.method == "POST":
        data = {
            "first_name": request.form.get("first_name", ""),
            "last_name": request.form.get("last_name", ""),
            "email": request.form.get("email", ""),
        }
        valid, fields = validate(data)
        if not valid:
            error_message = "Data missing from these fields: %s" % ", ".join(fields)
            return build_response({"status": "error", "message": error_message}, 400)

        query, vals = insert(data)
        # connect only after validation, so a bad request never opens a connection
        conn = connect()
        if conn is None:
            return build_response({"status": "error", "message": "database connection error"}, 500)
        try:
            # the with block closes the cursor when it exits
            with conn.cursor() as cur:
                cur.execute(query, vals)
            conn.commit()
        except Exception:
            logger.exception("insert error")
            return build_response({"status": "error", "message": "insert error"}, 500)
        finally:
            conn.close()
        return build_response({"status": "success"}, 200)
```

This function does a few things. First, we take the form data from the request and build a more digestible data dictionary to work with. Then we validate that data in preparation for persistence. If it's valid, we attempt to insert it into our database. If that succeeds, we close our database cursor and connection and respond with a success message. If not, we log the error and return an error HTTP response.

As you can see, we're still missing our insert and validate functions:

```python
# app.py
def validate(data):
    error_fields = []
    not_null = [
        "first_name",
        "last_name",
        "email",
    ]
    for x in not_null:
        if x not in data or len(data[x]) == 0:
            error_fields.append(x)
    return (len(error_fields) == 0, error_fields)
```

This returns a boolean telling us whether our data is valid, along with a list of the fields that are missing from it. Next, we need to write a simple insert query:

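```python
# app.py
# add this import at the top of app.py
from uuid import uuid1, uuid5


def insert(data):
    # build a unique string ID for the new row
    uniq_id = str(uuid5(uuid1(), str(uuid1())))
    # the %s placeholders are filled in (and escaped) by PyMySQL when the query runs
    query = """insert into User (ID, firstName, lastName, email)
               values (%s, %s, %s, %s)"""
    return (query, (uniq_id, data["first_name"], data["last_name"], data["email"]))
```

Update your function:

```shell
zappa update
```

Now we should be able to POST to our Lambda function's user endpoint (I used Postman, but anything works), and we should see a success response.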

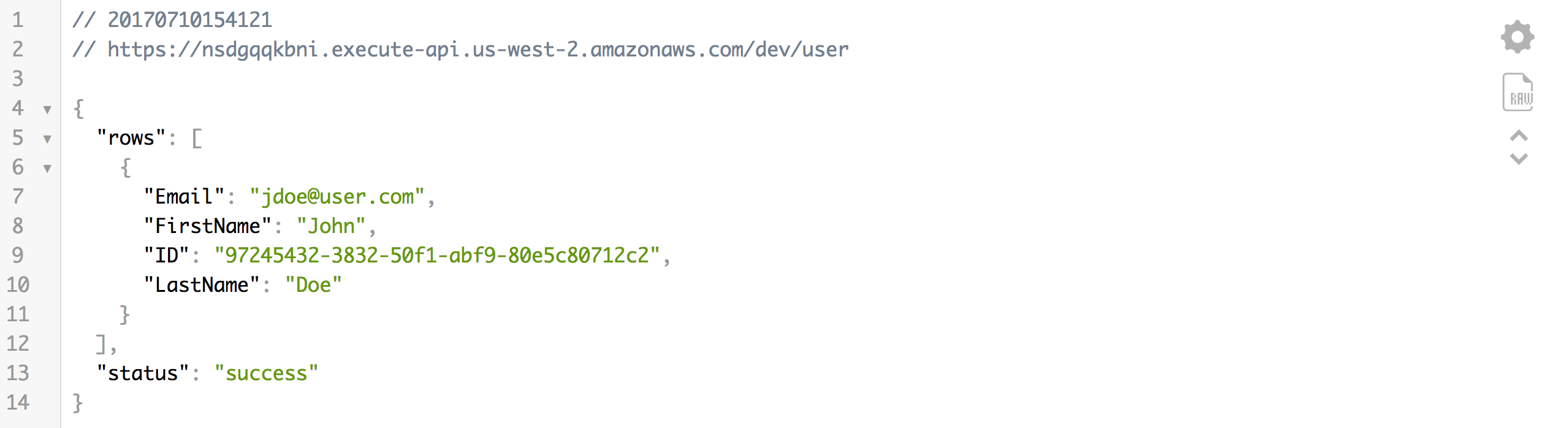
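The `%s` markers in the insert query are placeholders, not Python string formatting. The database driver escapes each value before running the query, which keeps form input from being interpreted as SQL. The sketch below shows the same idea with `sqlite3`, which uses `?` placeholders instead of PyMySQL's `%s`. It also uses `uuid4()`, a simpler way to get a random ID than the `uuid5(uuid1(), ...)` combination above. SQLite stands in for MySQL here purely so the example runs without a database server. `insert_user` is a hypothetical helper.

```python
import sqlite3
from uuid import uuid4


def insert_user(conn, data):
    """Insert one user using bound parameters and return the generated ID."""
    uniq_id = str(uuid4())
    conn.execute(
        "insert into User (ID, firstName, lastName, email) values (?, ?, ?, ?)",
        (uniq_id, data["first_name"], data["last_name"], data["email"]),
    )
    conn.commit()
    return uniq_id


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "create table User (ID varchar(255) NOT NULL, firstName varchar(255) NOT NULL, "
        "lastName varchar(255) NOT NULL, email varchar(255) NOT NULL, PRIMARY KEY (ID))"
    )
    # A value that looks like SQL is stored as plain text, not executed.
    new_id = insert_user(conn, {"first_name": "Robert'); drop table User;--", "last_name": "Tables", "email": "bobby@example.com"})
    print(conn.execute("select firstName from User where ID = ?", (new_id,)).fetchone())
```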

Get request for users in RDS

For the GET request, we just want to return a JSON representation of our user table. We can do this by adding GET handling to our user function:

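```python
# app.py
@app.route('/user', methods=["GET", "POST"])
def user():
    if request.method == "GET":
        conn = connect()
        if conn is None:
            return build_response({"status": "error", "message": "database connection error"}, 500)
        items = []
        try:
            with conn.cursor() as cur:
                cur.execute("select * from User")
                # DictCursor yields each row as a dict, ready for json.dumps
                for row in cur:
                    items.append(row)
        except Exception:
            logger.exception("error getting users")
            return build_response({"status": "error", "message": "error getting users"}, 500)
        finally:
            conn.close()
        return build_response({"rows": items, "status": "success"}, 200)

    # ... the POST handling from the previous section stays here unchanged
```

Run `zappa update` and test that your function works.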
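PyMySQL's `DictCursor` returns each row as a dict keyed by column name, which is why `json.dumps` can serialize the list of rows directly. To preview the response shape without deploying, here is a sketch that uses `sqlite3.Row` as a stand-in for `DictCursor`. `fetch_users` and `users_payload` are hypothetical helpers, and SQLite again stands in for MySQL.

```python
import json
import sqlite3


def fetch_users(conn):
    """Return every row of the User table as a list of plain dicts."""
    conn.row_factory = sqlite3.Row  # rows support access by column name
    rows = conn.execute("select ID, firstName, lastName, email from User order by lastName").fetchall()
    return [dict(row) for row in rows]


def users_payload(conn):
    """Build the same JSON body shape that the /user GET endpoint returns."""
    return json.dumps({"rows": fetch_users(conn), "status": "success"})
```

Calling `users_payload(conn)` on a table holding one user gives a string like `{"rows": [{"ID": "1", "firstName": "John", ...}], "status": "success"}`.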

I recommend getting a JSON prettifier extension like json-viewer to see an easily digestible representation of your database in the browser. Once you have an extension enabled, visit your user endpoint in your browser and you should see your user table in JSON form like so:

Adding CORS handling to your Lambda function

CORS is what gave me the most trouble, and it's ultimately necessary if you ever want to call your function from a client-side app. I tried using AWS's automated CORS setup, and I tried wrapping the app with the Flask-CORS package. All of these presented their fair share of headaches. Since Zappa sets your function up as a proxy, it's much simpler to hand-roll your CORS headers and OPTIONS preflight handling. Newer versions of Zappa may have an integrated way to handle this, but I found that doing it yourself is really straightforward and requires no dependencies.

CORS headers

All I had to do to add CORS headers was add two lines to my build_response function:

```python
# app.py
def build_response(resp_dict, status_code):
    response = Response(json.dumps(resp_dict), status_code, mimetype="application/json")
    response.headers["Access-Control-Allow-Origin"] = "*"
    response.headers["Access-Control-Allow-Headers"] = "Content-Type"
    return response
```

Handling preflight (OPTIONS) requests

When a browser application makes a cross-origin request, the browser will often first send an OPTIONS request to your function as a preflight check. This preflight asks whether the actual request is allowed. We'll need to handle that by adding a few things to our user function:

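```python
# app.py
# OPTIONS must be listed explicitly; otherwise Flask answers it automatically
# (without our CORS headers) and this branch never runs
@app.route('/user', methods=["GET", "POST", "OPTIONS"])
def user():
    if request.method == "OPTIONS":
        return build_response({"status": "success"}, 200)
    # ... the GET and POST handling from above stays the same
```

That's it: your app should be good to go as far as CORS is concerned. I would recommend only allowing requests from specific sites, though, instead of allowing any origin to make requests to your function. That'll be covered in this branch.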
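Here is a sketch of what restricting origins could look like in place of the `*` wildcard. The helper checks the request's `Origin` header against an allowlist and echoes the origin back only when it matches. It adds `Vary: Origin` so caches know the response differs per origin. For preflight requests it also returns `Access-Control-Allow-Methods` and `Access-Control-Max-Age`. `ALLOWED_ORIGINS`, its example domains, and `cors_headers` are all hypothetical.

```python
# Hypothetical allowlist; replace with the sites that should be able to call your API.
ALLOWED_ORIGINS = {"https://app.example.com", "http://localhost:3000"}


def cors_headers(origin, preflight=False):
    """Return CORS response headers for a request from `origin` (None if absent)."""
    headers = {"Vary": "Origin"}
    if origin not in ALLOWED_ORIGINS:
        # Without Access-Control-Allow-Origin, the browser refuses to expose the response.
        return headers
    headers["Access-Control-Allow-Origin"] = origin
    headers["Access-Control-Allow-Headers"] = "Content-Type"
    if preflight:
        headers["Access-Control-Allow-Methods"] = "GET, POST, OPTIONS"
        headers["Access-Control-Max-Age"] = "600"  # seconds the browser may cache this preflight
    return headers
```

In build_response you could replace the two hardcoded header lines with a loop over `cors_headers(request.headers.get("Origin"), request.method == "OPTIONS").items()`, setting each `response.headers[key] = value`.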

Useful resources

I found the following resources really helpful while building this application and writing this tutorial: