The Great Estimating Experiment: How We Reignite Scrutiny in Estimating

At every agency, time=money. So, keeping people busy on billable project work is critical to the bottom line. One of my hats at Viget is “Resource Manager.” Some agencies refer to this role as “Traffic Manager.” At its core, it’s the art of scheduling billable staff on projects to maximize utilization and team efficiency.

A major factor in keeping people billable is estimating the hours they’ll need over time for each project. This post will discuss the reasons why our estimates aren’t always accurate and describe a recent experiment that helped improve this accuracy.

Considerations in Estimating Hours

Juggling project assignments across a team here at Viget includes considerations such as:

- Availability

- Location

- Skill set

- Alignment with career goals and interests

- Client match

- Team composition

- Most recent projects.

We sell projects under assumptions regarding timeline and scope of work that dictate the general pace of a project. This initial understanding allows us to identify and commit team members to a project over a specified period of time (i.e., helping us see the “big picture”). This resourcing plan shifts week-to-week, however, once the project is underway because of:

- Unplanned unavailability on either our side or the client side

- Faster/slower production time

- Faster/slower client decisions/approvals

- Changes in scope

- Changes in delivery deadline.

Viget’s Process

As a result, we rely on our project managers to update their project staffing plans weekly, which gives us an up-to-date view of both the big picture and near-term bench strength. Most PMs manage multiple projects, however, and resource requirements are so dynamic that keeping staffing projections up to date becomes a dreaded chore -- and the tendency to leave the numbers unchanged grows over time. Because accurate staffing projections affect when we can sell teams on incoming work, maintaining close scrutiny of project team resourcing plans is essential.

Estimating a team’s hours over time should become easier as a project moves along. You learn how long it takes for client stakeholders to reach consensus on a decision. You learn how fast your team can produce deliverables. You uncover potential blockers and address them. So, we expect that planned team hours will become more predictable and accurate over the course of a project. But how do we know whether staffing projections still fall into the “guesstimate” category or whether there’s a high confidence level in the accuracy of the numbers? We dig into the data.

The Experiment

Every now and then we analyze planned time vs. actual time to gut-check how well we’re estimating team hours week-to-week. This analysis compares the projections entered into our weekly Resource Planning Google spreadsheet (still the best tool we've found for our workflow) against actual time tracking data logged in our Harvest time tracking system. Several of us spot-check the data regularly; however, we occasionally conduct more in-depth reviews.

Cue the “Great Estimating Experiment.” This past April and May, we conducted one of these more comprehensive reviews to understand why actual hours worked on projects were lower than projections. David and Ryan, two of our Rails developers, had built an app that extracts data from Harvest to generate custom reports to meet innumerable data needs of ours. We download Harvest data in .csv form, upload it into our app, and parse the data into time entries, employees, clients, etc. We’ve named this custom Rails app “Cropduster” in keeping with the Harvest theme. (Our team has also built other Rails apps that integrate with Harvest called “Reaper” and “Oxcart” -- but, those are for another post.) We are in the custom software development business, after all, so creating useful, custom tools is par for the course here at Viget.

Each week for six consecutive weeks, I pulled the planned and actual data from Cropduster and then chased down every person whose actual hours came in 2 or more hours above or below their planned hours. I categorized each variance using one of 16 set explanations that we established at the beginning of the experiment. We were looking for trends and opportunities to close the gaps between the numbers.
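For readers who want to try a similar weekly check, here is a minimal Python sketch of the flagging step. It is not how Cropduster works (that is a Rails app, and its internals aren't shown here); the data shapes, names, and sample numbers below are all made up for illustration. The only detail taken from our process is the 2-hour threshold.

```python
from dataclasses import dataclass

# Threshold from the post: follow up on anyone 2 or more hours off plan.
VARIANCE_THRESHOLD_HOURS = 2.0


@dataclass
class Variance:
    person: str
    project: str
    planned: float
    actual: float

    @property
    def delta(self) -> float:
        # Positive means more hours were used than planned.
        return self.actual - self.planned


def flag_variances(planned, actual, threshold=VARIANCE_THRESHOLD_HOURS):
    """Compare one week of planned vs. actual hours.

    Both arguments are dicts mapping (person, project) -> hours.
    A pair missing from one side is treated as 0 hours on that side.
    """
    flagged = []
    for key in sorted(set(planned) | set(actual)):
        p = planned.get(key, 0.0)
        a = actual.get(key, 0.0)
        if abs(a - p) >= threshold:
            flagged.append(Variance(key[0], key[1], p, a))
    return flagged


# Hypothetical sample week (names and hours are invented).
planned = {
    ("Ana", "Project A"): 20.0,
    ("Ben", "Project A"): 10.0,
    ("Ana", "Project B"): 8.0,
}
actual = {
    ("Ana", "Project A"): 17.5,
    ("Ben", "Project A"): 11.0,
    ("Cy", "Project B"): 3.0,
}

for v in flag_variances(planned, actual):
    print(f"{v.person} / {v.project}: planned {v.planned}, "
          f"actual {v.actual}, delta {v.delta:+.1f}")
```

Treating a missing entry as 0 hours is a deliberate choice in this sketch: it surfaces both planned work that never happened and unplanned work that did, which are exactly the cases worth a follow-up conversation.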

I then tapped the talents of one of our Data and Analytics experts, Paul Koch, to slice and dice the data I’d collected and categorized. He generated nearly 20 different views of the data.

tl;dr Admittedly, six weeks isn’t a huge period of time; however, we did see positive results even in this short time frame.

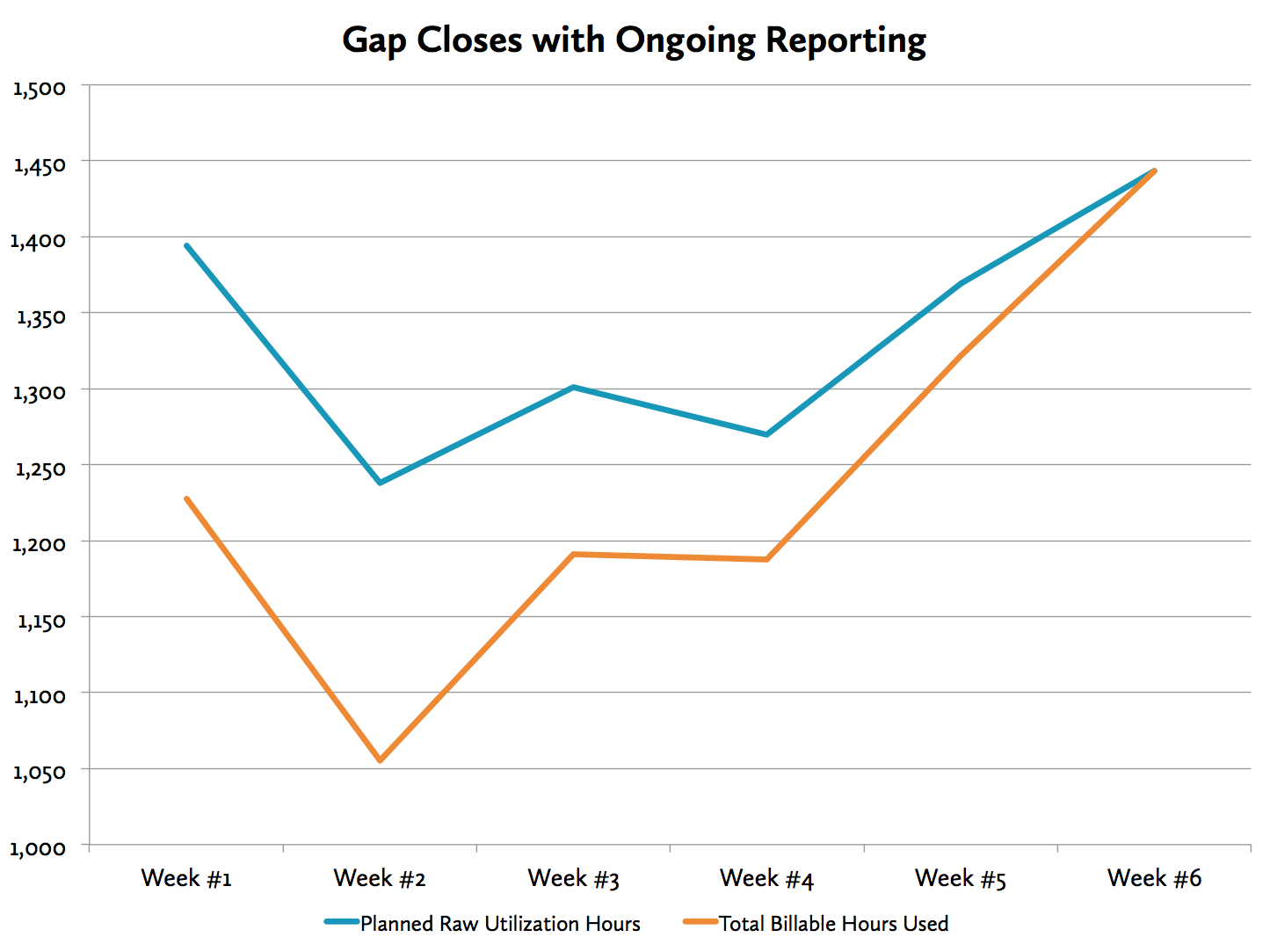

One of the more interesting graphs is the one below, which shows that the gap between planned and actual hours narrowed continually for several weeks and then closed completely over the course of the experiment. Every time we’ve conducted one of these more thorough analyses, we’ve seen a similar finding. We believe the data indicates that increased scrutiny of the numbers each week leads to improved attention to estimates on the PM side, as well as increased awareness of hours expectations by team members assigned to projects.

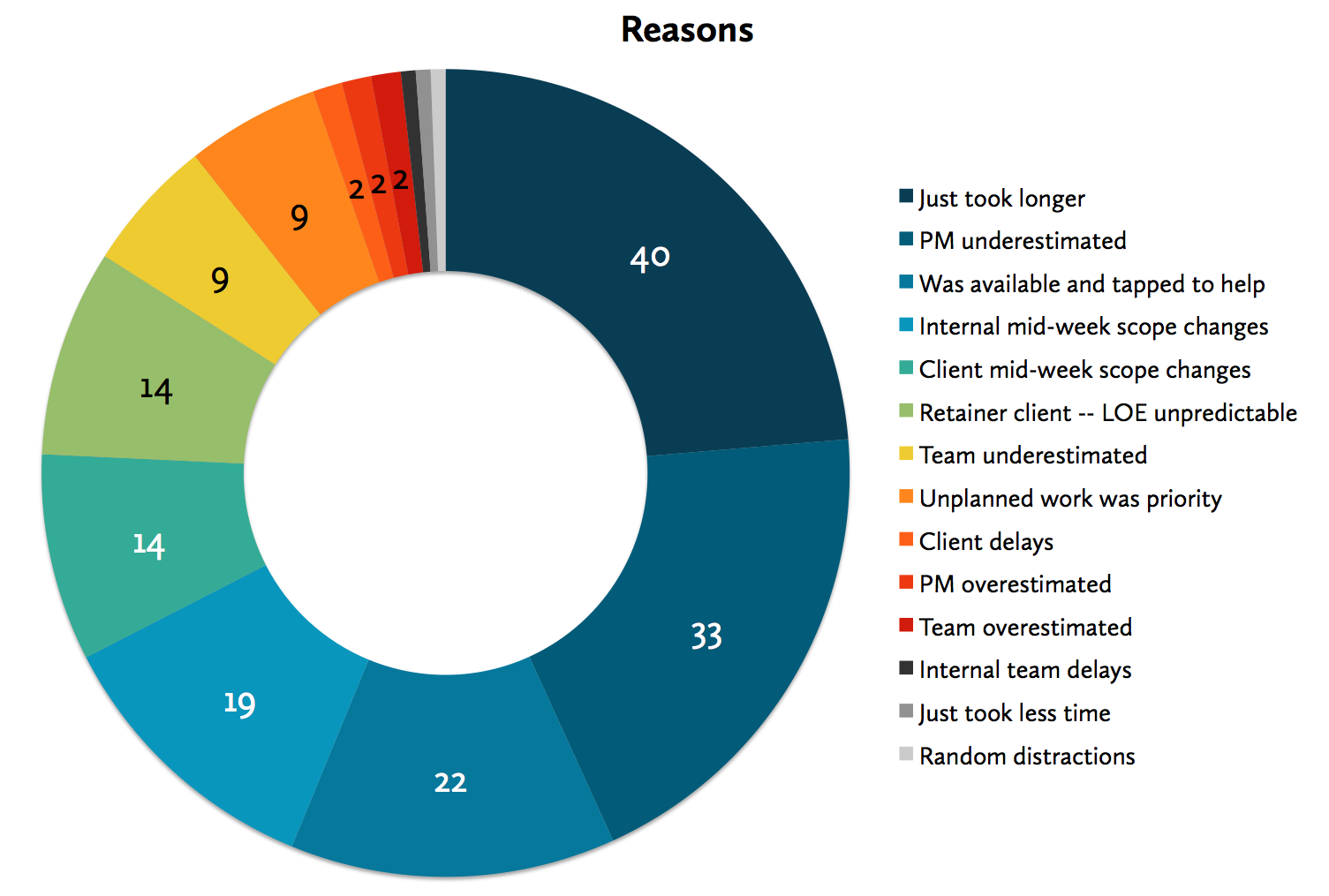

Another interesting chart was the one below, which shows the most frequent explanations for using more or fewer hours than planned. This data has helped us see that 47% of the cases where we used more hours than planned resulted from internal underestimating (vs. client delays or scope changes, which accounted for only 6% of the over-use cases). This knowledge highlights an area where we can improve (and have already).
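The percentages above come from a simple tally: split the categorized variances into over-use and under-use cases, then compute each explanation's share within its group. A rough Python sketch of that arithmetic follows. The explanation labels and sample rows are hypothetical stand-ins (our actual 16 explanations aren't listed in this post), so the output will not match the figures quoted above.

```python
from collections import Counter


def explanation_shares(variances):
    """Return each explanation's share (percent) of over- and under-use cases.

    `variances` is an iterable of (delta_hours, explanation) pairs, where a
    positive delta means more hours were used than planned.
    """
    counts = {"over": Counter(), "under": Counter()}
    for delta, explanation in variances:
        if delta == 0:
            continue  # on plan, nothing to explain
        direction = "over" if delta > 0 else "under"
        counts[direction][explanation] += 1

    shares = {}
    for direction, counter in counts.items():
        total = sum(counter.values())
        shares[direction] = {
            explanation: round(100 * n / total, 1)
            for explanation, n in counter.most_common()
        }
    return shares


# Hypothetical categorized variances (labels and hours are invented).
sample = [
    (3.0, "internal underestimate"),
    (2.5, "internal underestimate"),
    (4.0, "scope change"),
    (2.0, "client delay"),
    (-3.0, "client delay"),
    (-2.0, "unplanned time off"),
]

shares = explanation_shares(sample)
for direction, by_explanation in shares.items():
    print(direction, by_explanation)
```

Note that this counts one explanation per variance, mirroring the single "strongest influencer" approach we used during the experiment.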

Other data views show us which projects tended to be over- or under-estimated in terms of team hours, which projects tended to run closely to planned projections, as well as which Project Managers tended to more frequently over- or under-estimate hours, among other findings. Of course, the data in isolation doesn’t convey the nuances behind the explanations. And, the data doesn’t account for multiple explanations for a variance (when given multiple reasons, I picked the strongest influencer). We’ll refine our set of explanations during our next analysis to dig in more granularly and to account for multiple explanations.

In summary, shining a light on discrepancies, getting the team to pay more attention to planned hours, and then working to those hours can dramatically improve the accuracy of team resource projections -- and with it, your ability to know when you will have a team available for a new assignment.