Planning for Scalability

Lawson Kurtz, Former Senior Developer

We recently launched The Puppy Bowl Fantasy Draft. Most apps we launch see their share of traffic spikes, but this one was unique: its event-driven nature and popularity posed some serious scalability challenges.

We can't share specifics (we're legally prohibited from doing so), but the app's historical traffic looks roughly like this:

So how do we keep the app running smoothly when traffic hits that "A TON of users" mark?

For too many apps, scaling is an afterthought. When you don't plan for scale from the very beginning, you have little choice but to keep adding servers to absorb the application's traffic. In some circumstances that works well, but servers, and the people who configure and manage them, are expensive. More servers mean fewer dollars in your pocket at the end of the day.

But with a little smarts and up-front planning, you can dramatically reduce the resources ($$$) consumed by your app, all while increasing your app's performance.

Planning for Scale

Scalability was an important consideration from the very start of our engagement with Discovery. Here are three strategies we put in place early on to keep the Puppy Bowl Fantasy Draft up and running smoothly.

Make the users' computers do the work.

User-experience benefits aside, client-side apps can be terrific for scalability, provided you choose not to render them on the server.

Traditionally, web applications are rendered on the server, meaning a server does the work of creating a page to send to a visitor's browser. This is desirable for a number of reasons, but scalability is not one of them.

When your application is primarily client-side, your server can quickly build nearly blank pages that contain just enough to start the app in the visitor's browser. The browser, not your server, then does all the work of rendering the page the visitor interacts with.
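A minimal sketch of such a shell page, written in Python and assuming a single bundled script at a hypothetical `/assets/app.js` with an empty `<div id="app">` mount point (neither name comes from the original app). The server's only job is string assembly; all real rendering happens in the browser.

```python
from html import escape

# Hypothetical path to the client-side bundle; not taken from the original app.
APP_BUNDLE = "/assets/app.js"


def render_shell(title: str) -> str:
    """Return a nearly blank HTML page whose only job is to boot the client app.

    Nothing here depends on who is asking, so every visitor to a given URL
    receives byte-for-byte the same response.
    """
    return (
        "<!DOCTYPE html>\n"
        "<html>\n"
        "<head>\n"
        '  <meta charset="utf-8">\n'
        f"  <title>{escape(title)}</title>\n"
        "</head>\n"
        "<body>\n"
        '  <div id="app"></div>\n'  # the client-side app mounts itself here
        f'  <script src="{APP_BUNDLE}"></script>\n'
        "</body>\n"
        "</html>\n"
    )


if __name__ == "__main__":
    print(render_shell("Puppy Bowl Fantasy Draft"))
```

Because the output contains no per-visitor data, it is exactly the kind of response a cache can hand to everyone.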

Just as important as saving the computing cost of server-side rendering (if not more so): the nearly blank pages our server provides are so generic that they can be cached aggressively, with extremely high hit rates (more on that below). That is often quite challenging with server-rendered apps.

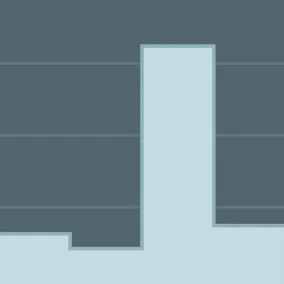

A page from our app as rendered by our server:

While it might look blank, I assure you it's not! Included on the page is just enough information to allow our pages to be shared and searched. We do just enough work to play nicely with social networks and search engines. This is what social networks and search engines see on that "blank" page.
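As a rough sketch of that "just enough" metadata, the function below builds a meta description plus standard Open Graph tags (`og:title`, `og:description`, `og:url`, `og:image`), which is one common way to make a page shareable and indexable. The function name and the example values are hypothetical; the article doesn't say exactly which tags the app emits.

```python
from html import escape


def share_meta_tags(title: str, description: str, url: str, image_url: str) -> str:
    """Build the <head> tags crawlers read when a page is shared or indexed.

    Uses standard Open Graph properties plus a plain meta description.
    Values are HTML-escaped because they end up inside quoted attributes.
    """
    tags = [
        ("name", "description", description),
        ("property", "og:title", title),
        ("property", "og:description", description),
        ("property", "og:url", url),
        ("property", "og:image", image_url),
    ]
    return "\n".join(
        f'<meta {attr}="{key}" content="{escape(value, quote=True)}">'
        for attr, key, value in tags
    )


if __name__ == "__main__":
    print(share_meta_tags(
        "Draft Day",
        "Pick your puppies",
        "https://example.com/draft",      # hypothetical URL
        "https://example.com/og.png",     # hypothetical share image
    ))
```

These tags vary per URL but not per visitor, so adding them to the shell page doesn't hurt cacheability.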

Don't do the same work twice.

If your server ever does the same work twice, you're wasting server resources. We avoid this waste by caching responses: once our server has gone to the effort of producing the page or asset a visitor requested, we remember that response so the server never has to produce it again.
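At its smallest scale, "remember the response" is memoization. The sketch below uses Python's standard-library `functools.lru_cache` to show the idea inside a single process; `render_page` is a hypothetical stand-in for whatever work builds a response, and an in-process cache is a simplification of the shared caches discussed next.

```python
from functools import lru_cache

render_count = 0  # counts how often the expensive work actually runs


@lru_cache(maxsize=1024)
def render_page(path: str) -> str:
    """Hypothetical stand-in for expensive response generation."""
    global render_count
    render_count += 1
    return f"<html><!-- page for {path} --></html>"


for path in ["/draft", "/draft", "/rules", "/draft"]:
    render_page(path)

print(render_count)  # 2: "/draft" and "/rules" were each built only once
```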

Caching takes many forms, but for scalability the most effective is often a proxy caching service like Cloudflare. In a proxy cache setup, the proxy (Cloudflare, in our case) receives all inbound requests to our domain before our application ever sees them. If Cloudflare hasn't seen a particular request before, it forwards the request to our app servers and stores the response on the way back. When Cloudflare recognizes a request, it simply returns the stored response without asking our app servers to do any work.
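The flow described above can be modeled in a few lines. This is a toy sketch, not Cloudflare's implementation: it assumes the cache key is just the request path and ignores expiry and `Cache-Control` headers entirely. `CachingProxy` and `app_server` are hypothetical names.

```python
from typing import Callable, Dict


class CachingProxy:
    """Toy model of a proxy cache sitting in front of an origin server.

    Simplifying assumptions: no expiry, no Cache-Control handling, and the
    cache key is only the request path.
    """

    def __init__(self, origin: Callable[[str], str]) -> None:
        self.origin = origin
        self.store: Dict[str, str] = {}
        self.origin_calls = 0

    def handle(self, path: str) -> str:
        if path in self.store:          # seen before: answer from the cache
            return self.store[path]
        self.origin_calls += 1          # unseen: forward to the app servers...
        response = self.origin(path)
        self.store[path] = response     # ...and store the response on the way back
        return response


def app_server(path: str) -> str:
    """Hypothetical origin that does the real work."""
    return f"response for {path}"


proxy = CachingProxy(app_server)
for path in ["/", "/draft", "/", "/", "/draft"]:
    proxy.handle(path)

print(proxy.origin_calls)  # 2: five requests, but only two reached the origin
```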

As a result, the vast majority of visitors never interact with our application servers at all. Our servers only have to do work for requests the cache hasn't seen before.
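The effect can be written as a back-of-the-envelope formula. Writing $R$ for total requests and $h$ for the cache hit rate (the fraction answered by the proxy), the origin only sees the misses. The numbers in the second line are purely hypothetical, not the app's real traffic:

$$
R_{\text{origin}} = R \cdot (1 - h)
$$

$$
R = 10^{6},\; h = 0.99 \;\Rightarrow\; R_{\text{origin}} = 10^{6} \cdot 0.01 = 10^{4}
$$

Because origin load scales with $1 - h$, raising the hit rate from 99% to 99.9% cuts origin traffic by another factor of ten.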

As mentioned above, caching is much easier because our server doesn't render personalized UI. Since responses are generic, we can serve cached responses in more circumstances.
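To see why generic responses matter, here is a small simulation under hypothetical traffic (1,000 visitors each loading the same five pages). It compares a cache keyed only on the page with one that must also key on the visitor, which is effectively what personalized server-rendered HTML forces. The page list and key functions are illustrative assumptions.

```python
from itertools import product

# Hypothetical traffic: 1,000 visitors each load the same 5 pages.
visitors = [f"user{i}" for i in range(1000)]
pages = ["/", "/draft", "/team", "/stats", "/rules"]
requests = list(product(visitors, pages))  # 5,000 (visitor, page) requests


def hit_rate(cache_key) -> float:
    """Fraction of requests served from cache for a given cache-key function.

    The first request for each distinct key misses; every repeat hits.
    """
    seen = set()
    hits = 0
    for visitor, page in requests:
        key = cache_key(visitor, page)
        if key in seen:
            hits += 1
        else:
            seen.add(key)
    return hits / len(requests)


generic = hit_rate(lambda visitor, page: page)                 # same page for everyone
personalized = hit_rate(lambda visitor, page: (visitor, page))  # page differs per visitor

print(f"generic: {generic:.1%}, personalized: {personalized:.1%}")
# generic: 99.9%, personalized: 0.0%
```

Real traffic is messier, but the direction holds: every piece of per-visitor data in a response multiplies the number of distinct cache keys.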

Beyond proxy caching, we can also instruct visitors' browsers to cache our application assets. The next time these visitors come back, those assets load directly and instantly from their own computers.
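Browser caching is driven by standard HTTP `Cache-Control` headers. The sketch below shows one common policy, which the article does not spell out: content-fingerprinted asset filenames get a one-year, `immutable` lifetime, while HTML shells (whose URLs never change) use `max-age=0` for browsers and a short `s-maxage` for shared caches such as a CDN. The `/assets/` prefix, the 300-second value, and both function names are hypothetical.

```python
import hashlib


def fingerprint(filename: str, content: bytes) -> str:
    """Embed a short content hash in an asset filename, e.g. app.<hash>.js.

    Any change to the content yields a new URL, so stale cached copies are
    simply never requested again.
    """
    stem, dot, ext = filename.rpartition(".")
    digest = hashlib.sha256(content).hexdigest()[:8]
    return f"{stem}.{digest}.{ext}" if dot else f"{filename}.{digest}"


def cache_control_for(path: str) -> str:
    """Choose a Cache-Control header value (hypothetical policy)."""
    if path.startswith("/assets/"):
        # Fingerprinted assets never change at a given URL: cache for a year.
        return "public, max-age=31536000, immutable"
    # HTML shells keep stable URLs, so browsers should revalidate them,
    # while shared caches may keep them for a few minutes.
    return "public, max-age=0, s-maxage=300"


if __name__ == "__main__":
    asset = fingerprint("app.js", b"console.log('hello')")
    print(asset, "->", cache_control_for("/assets/" + asset))
    print("/draft ->", cache_control_for("/draft"))
```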

Aggressive proxy caching means bored servers...

Use professional data infrastructure services.

The Puppy Bowl Fantasy Draft includes some data-intensive functionality, such as real-time updates of puppy stats on game day, that requires advanced, scalable data infrastructure. But building and managing data infrastructure for scale is complex.

Fortunately, some people spend their entire lives solving these exact problems. Services like Firebase and Parse (commonly called Backend as a Service, or BaaS, providers) excel at handling the data infrastructure demands of large-scale apps. For companies without dedicated DataOps teams, these services can be far simpler, cheaper, and more reliable than running their own scalable data infrastructure.

We make extensive use of Firebase for the Puppy Bowl Fantasy Draft application's data needs.
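Part of what makes a service like the Firebase Realtime Database useful for live stats is its listener model: clients subscribe to a path and the service pushes each change to them, rather than every client polling your servers. The in-memory class below only illustrates that pattern; it is not Firebase's API, and `StatsChannel`, `subscribe`, `publish`, the `puppies/fluffy` path, and the stat fields are all hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Listener = Callable[[dict], None]


class StatsChannel:
    """In-memory stand-in for a real-time data service (not Firebase's API).

    Clients register a listener once; each write is pushed to every listener
    on that path, so no client has to poll an app server for fresh stats.
    """

    def __init__(self) -> None:
        self.listeners: DefaultDict[str, List[Listener]] = defaultdict(list)
        self.data: Dict[str, dict] = {}

    def subscribe(self, path: str, listener: Listener) -> None:
        self.listeners[path].append(listener)
        if path in self.data:  # deliver the current value right away
            listener(self.data[path])

    def publish(self, path: str, value: dict) -> None:
        self.data[path] = value
        for listener in self.listeners[path]:  # push the change to every subscriber
            listener(value)


channel = StatsChannel()
received: List[dict] = []
channel.subscribe("puppies/fluffy", received.append)
channel.publish("puppies/fluffy", {"touchdowns": 1})
channel.publish("puppies/fluffy", {"touchdowns": 2})
print(received)  # [{'touchdowns': 1}, {'touchdowns': 2}]
```

In the real setup, the fan-out to thousands of connected browsers is exactly the part the BaaS provider scales for you.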

With a little planning, it's possible to build applications that scale both reliably and inexpensively. And even if you never expect to serve millions of users, building scalability best practices into your process can go a long way toward reducing your ongoing infrastructure costs at any scale.

And if you need an app that scales, or want to scale your existing app, but don't know where to start, let us know!